import numpy as np

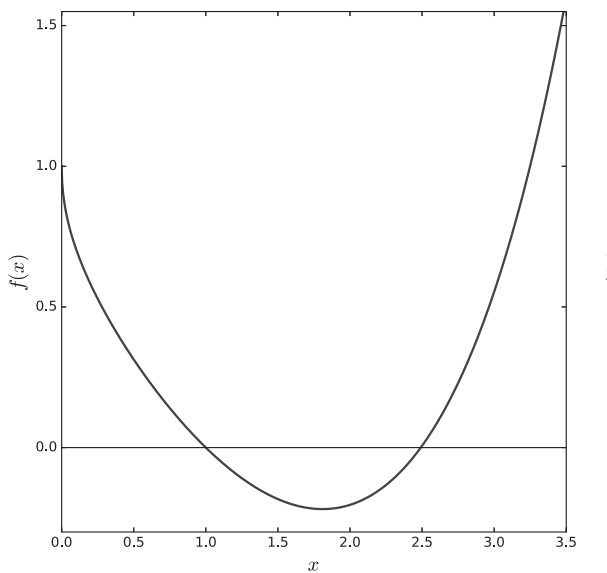

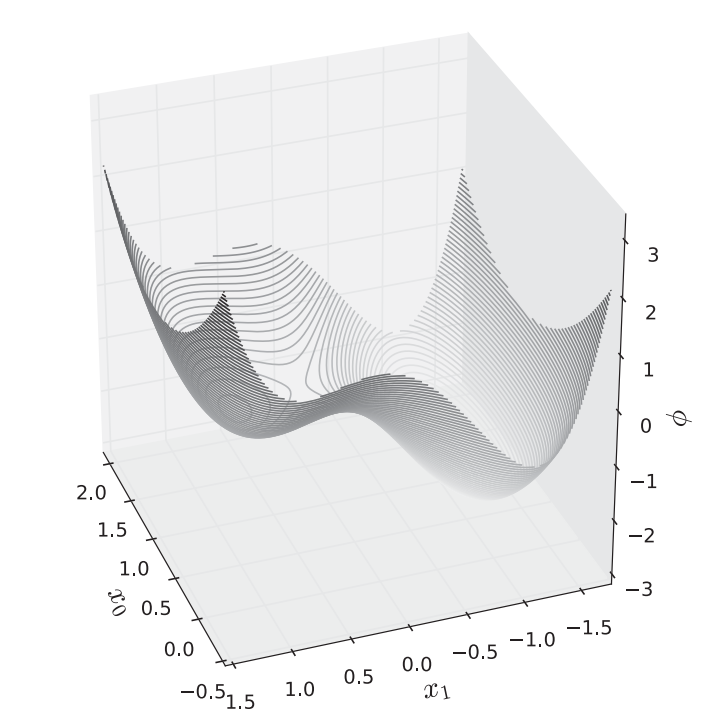

def phi(xs):
    # Scalar function of two variables that we want to minimize
    x0, x1 = xs
    return x0**2 - 2*x0 + x1**4 - 2*x1**2 + x1

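def gradient(phi,xs,h=1.e-6):
    # Forward-difference approximation to the gradient of phi at xs
    n = xs.size
    phi0 = phi(xs)
    # Column j of Xph is xs with h added to component j,
    # so phi(Xph) evaluates all n shifted points in one call
    Xph = (xs*np.ones((n,n))).T + np.identity(n)*h
    grad = (phi(Xph) - phi0)/h
    return grad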
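
A quick sanity check of the forward-difference gradient is to compare it against partial derivatives worked out by hand. The sketch below repeats `phi` and `gradient` so that it runs on its own; `analytic_gradient` is a hypothetical helper introduced only for this comparison and is not part of the listing.

```python
import numpy as np

def phi(xs):
    x0, x1 = xs
    return x0**2 - 2*x0 + x1**4 - 2*x1**2 + x1

def gradient(phi, xs, h=1.e-6):
    # forward-difference gradient, same logic as in the listing
    n = xs.size
    phi0 = phi(xs)
    Xph = (xs*np.ones((n, n))).T + np.identity(n)*h
    return (phi(Xph) - phi0)/h

def analytic_gradient(xs):
    # hypothetical helper: hand-derived partial derivatives of phi
    x0, x1 = xs
    return np.array([2*x0 - 2, 4*x1**3 - 4*x1 + 1])

xs = np.array([2., 0.25])
num = gradient(phi, xs)
exact = analytic_gradient(xs)
# the difference should be of order h, the forward-difference truncation error
print(num, exact, np.abs(num - exact))
```

The two results should agree to roughly the size of `h`, which is the expected accuracy of a first-order forward difference.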

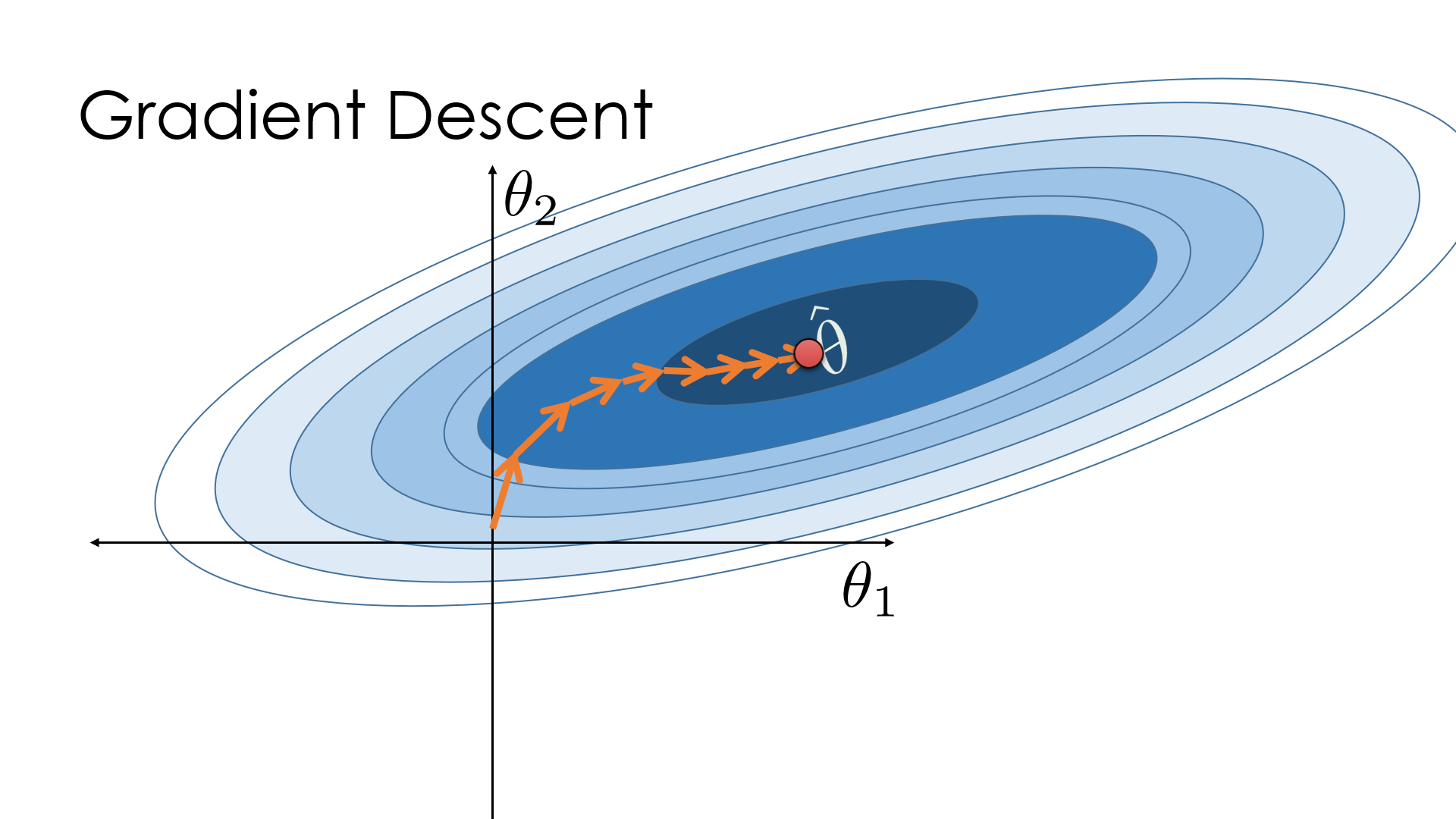

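def descent(phi,gradient,xolds,gamma=0.15,kmax=200,tol=1.e-8):
    # Gradient descent with fixed step size gamma
    for k in range(1,kmax):
        xnews = xolds - gamma*gradient(phi,xolds)
        err = termcrit(xolds,xnews)
        # columns: iteration, current point, termination measure, phi value
        print(k, xnews, err, phi(xnews))
        if err < tol:
            break
        xolds = np.copy(xnews)
    else:
        # reached only if the loop never hit break, i.e. no convergence
        xnews = None
    return xnews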

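def termcrit(xolds,xnews):
    # Sum of component-wise relative changes between successive iterates;
    # assumes no component of xnews is exactly zero
    errs = np.abs((xnews - xolds)/xnews)
    return np.sum(errs)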
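
Because `termcrit` divides by `xnews`, the measure can become large whenever a component passes close to zero, even if the step itself is small. As a rough illustration, the sketch below applies the same function to the (rounded) iterates printed for steps 5 and 6 of the run, where the second component drops to about 0.05.

```python
import numpy as np

def termcrit(xolds, xnews):
    # same relative-change measure as in the listing
    errs = np.abs((xnews - xolds)/xnews)
    return np.sum(errs)

# iterates copied (rounded) from steps 5 and 6 of the printed run
x5 = np.array([1.16806958, 0.12494345])
x6 = np.array([1.11764856, 0.04873952])

err = termcrit(x5, x6)
abs_step = np.abs(x6 - x5)
# the absolute step is below 0.1 in each component,
# yet the relative measure exceeds 1 because x6[1] is small
print(err, abs_step)
```

This is why the third printed column rises above 1 around steps 6 and 7 before falling again once the second component moves away from zero.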

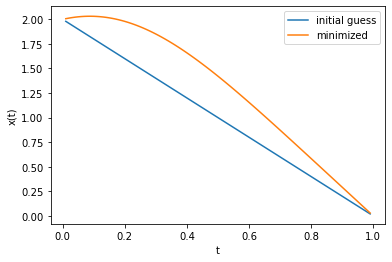

if __name__ == '__main__':
    xolds = np.array([2.,0.25])
    xnews = descent(phi, gradient, xolds)
    print(xnews)

1 [1.69999985 0.24062524] 0.21543067857184484 -0.38182351336797915

2 [1.48999974 0.22664124] 0.20264073065897387 -0.6333530209502826

3 [1.34299967 0.20564122] 0.21157625945177916 -0.7594983275463574

4 [1.24009962 0.17380848] 0.2661256195506861 -0.8280498618293368

5 [1.16806958 0.12494345] 0.45276305522029103 -0.8777871985954815

6 [1.11764856 0.04873952] 1.608607274900684 -0.9421647380026318

7 [ 1.08235384 -0.07208595] 1.7087398591446958 -1.0756695552415236

8 [ 1.05764754 -0.26511247] 0.7514526354432409 -1.3974185387806914

9 [ 1.04035313 -0.56299971] 0.5457308766418241 -2.0948395460096236

10 [ 1.02824704 -0.94372756] 0.41520335042339795 -2.9309660540915905

11 [ 1.01977278 -1.15566205] 0.19169789499758141 -3.0426740826911614

12 [ 1.01384079 -1.0729902 ] 0.08289908836262229 -3.0499045321707055

13 [ 1.00968841 -1.12557975] 0.05083473301209256 -3.054234380060766

14 [ 1.00678173 -1.09531014] 0.03052274820652257 -3.05538233943229

15 [ 1.00474706 -1.11406803] 0.018862352410894102 -3.05589334211737

16 [ 1.00332279 -1.10287596] 0.011567627294141984 -3.056063921191618

17 [ 1.00232581 -1.1097221 ] 0.007163907055812726 -3.056132246972263

18 [ 1.00162791 -1.10559374] 0.004430823076617533 -3.056157117969501

19 [ 1.00113939 -1.10810556] 0.0027547414719851855 -3.0561667943226265

20 [ 1.00079742 -1.10658539] 0.0017154484765218943 -3.0561704829239886

21 [ 1.00055805 -1.10750841] 0.0010726689437897882 -3.0561719231405475

22 [ 1.00039048 -1.10694906] 0.0006728037904015246 -3.056172494841105

23 [ 1.00027319 -1.10728843] 0.0004237463185425745 -3.056172722103985

24 [ 1.00019108 -1.10708268] 0.00026793975693115976 -3.0561728168266145

25 [ 1.00013361 -1.10720748] 0.00017018001506541433 -3.0561728552582403

26 [ 1.00009337 -1.1071318 ] 0.00010858056296563575 -3.0561728723063135

27 [ 1.00006521 -1.1071777 ] 6.961331368515191e-05 -3.0561728792903975

28 [ 1.0000455 -1.10714986] 4.485147212742855e-05 -3.0561728826380756

29 [ 1.0000317 -1.10716674] 2.9044432169820975e-05 -3.056172883986762

30 [ 1.00002204 -1.10715651] 1.8904903190473045e-05 -3.0561728846981224

31 [ 1.00001528 -1.10716272] 1.236866979687839e-05 -3.056172884967764

32 [ 1.00001054 -1.10715895] 8.133575043645464e-06 -3.0561728851287384

33 [ 1.00000723 -1.10716123] 5.37538599036186e-06 -3.056172885182245

34 [ 1.00000491 -1.10715985] 3.5698772250060277e-06 -3.0561728852203363

35 [ 1.00000329 -1.10716069] 2.3819480385739737e-06 -3.056172885230078

36 [ 1.00000215 -1.10716018] 1.5963972365386816e-06 -3.056172885239327

37 [ 1.00000136 -1.10716049] 1.0743651801166516e-06 -3.0561728852405894

38 [ 1.0000008 -1.1071603] 7.259538200711832e-07 -3.0561728852428383

39 [ 1.00000041 -1.10716041] 4.923441634847997e-07 -3.0561728852427184

40 [ 1.00000014 -1.10716035] 3.3505999120764203e-07 -3.0561728852432424

41 [ 0.99999995 -1.10716039] 2.287712532568859e-07 -3.0561728852430368

42 [ 0.99999981 -1.10716036] 1.564959858869905e-07 -3.0561728852431402

43 [ 0.99999972 -1.10716038] 1.0724305579788708e-07 -3.056172885243015

44 [ 0.99999965 -1.10716037] 7.378388107643756e-08 -3.0561728852430234

45 [ 0.99999961 -1.10716037] 5.101074560430894e-08 -3.056172885242959

46 [ 0.99999957 -1.10716037] 3.5236294141767044e-08 -3.0561728852429515

47 [ 0.99999955 -1.10716037] 2.431386477902596e-08 -3.056172885242921

48 [ 0.99999954 -1.10716037] 1.6863918806819815e-08 -3.0561728852429133

49 [ 0.99999953 -1.10716037] 1.1653038863784987e-08 -3.056172885242899

50 [ 0.99999952 -1.10716037] 8.088151816259404e-09 -3.0561728852428938

[ 0.99999952 -1.10716037]
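
The first component settles slightly below 1 rather than at 1. One plausible explanation is the bias of the forward difference: for the `x0` part of `phi`, `x0**2 - 2*x0`, the forward-difference slope is `2*x0 - 2 + h`, which vanishes at `x0 = 1 - h/2` instead of at `x0 = 1`. The sketch below checks this in one dimension; `f` and `forward` are hypothetical names used only here.

```python
h = 1.e-6

def f(x0):
    # the x0-dependent part of phi
    return x0**2 - 2*x0

def forward(f, x, h):
    # first-order forward-difference derivative
    return (f(x + h) - f(x))/h

at_one = forward(f, 1.0, h)          # close to h, not zero
at_shifted = forward(f, 1 - h/2, h)  # close to zero, up to roundoff
print(at_one, at_shifted)
```

With `h = 1.e-6` the shifted root is 0.9999995, consistent with the values the first component approaches in the printed run.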